My name is Adam Adli and I am finishing the third year of my undergraduate studies at the University of Toronto specializing in Computer Science. I’m going to start this blog post by talking a little bit about myself. I am a software engineer, an amateur musician, and above all, someone who loves to solve problems and treats every creation as art. I have a rather tangled background; I entered university as a life science student, but I have been a programmer since my pre-teen years. Somewhere along the way, I realized that I would flourish most in my computer science courses and so I switched programs at the beginning of my third year.

While entering this new and uncertain phase in my life and career, I had the opportunity of meeting Dr. Pascal Tyrrell and gaining admission to his research opportunity program (ROP399) course that focused on the application of Machine Learning to Medical Imaging under the Data Science unit of the Department of Medical Imaging.

Working in Dr. Tyrrell’s lab was one of the most unique experiences I have had thus far in university, allowing me to bridge my interests in medicine and computer science in order to gain valuable research experience. When I first began my journey, despite having a strong practical background in software development, I had absolutely no previous exposure to machine learning or high-performance computing.

As expected, beginning a research project in a field that you have no experience in is frankly not easy. I spent the first few months of the course trying to learn as much about machine learning algorithms and convolutional neural networks as I could; it was like learning to swim in an ocean. Thankfully, I had the support and guidance of my colleagues in the lab and my professor Dr. Tyrrell along the way. With their help, I pushed my boundaries and learned the core concepts of machine learning models and their development with solutions to real-world problems in mind. I finally had a thesis for my research.

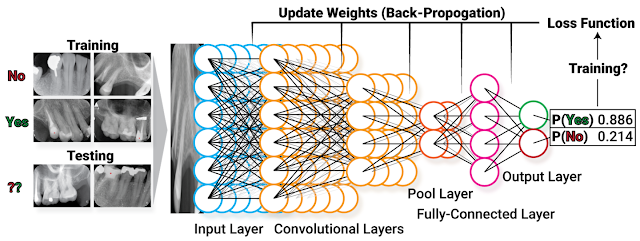

My research thesis was to show experimentally a relationship that was expected in theory: smaller training sets tend to result in over-fitting, and regularization helps prevent over-fitting, so regularization should be more beneficial for models trained on smaller training sets than for those trained on larger ones. Through late nights of coding and experimentation, I ran many repeated long-running computations on a binary classification model for dental x-ray images in order to show that employing L2 regularization is more beneficial for models trained on smaller training samples than for models trained on larger ones. This is an important finding because, in the field of medical imaging, large datasets can be difficult to come by, whether due to bureaucratic processes or the financial cost of developing them.

I managed to show that in real-world applications, there is an important trade-off between two resources: computation time and training data. L2 regularization requires hyperparameter tuning, which may require repeated model training, which can be very computationally expensive, especially for complex convolutional neural networks trained on large amounts of data. So, given the diminishing returns of regularization and the increased computational costs of its employment, I showed that L2 regularization is a feasible procedure to help prevent over-fitting and improve testing accuracy when developing a machine learning model with limited training data.
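To make the tuning cost concrete, here is a minimal sketch in plain Python. It is not the code from the experiment: it swaps the convolutional network for a toy one-weight linear model so that it runs instantly, and the names `fit_ridge_1d`, `mse`, and `tune_lambda` are made up for illustration. The point it shows is that every candidate L2 strength costs one full refit, so tuning multiplies the training time by the number of candidates.

```python
def fit_ridge_1d(xs, ys, lam):
    """Fit y ~ w * x with an L2 penalty (hypothetical toy stand-in for a CNN).

    Returns the w that minimises sum((w*x - y)**2) + lam * w**2,
    which has the closed form sum(x*y) / (sum(x*x) + lam).
    """
    sxy = sum(x * y for x, y in zip(xs, ys))
    sxx = sum(x * x for x in xs)
    return sxy / (sxx + lam)


def mse(w, xs, ys):
    """Mean squared error of the one-weight model on (xs, ys)."""
    return sum((w * x - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


def tune_lambda(train, val, candidates):
    """Try each L2 strength, refitting every time.

    Returns (best_lam, best_val_error, n_fits). n_fits equals
    len(candidates): this is the extra computation that tuning costs.
    """
    best_lam, best_err = None, None
    n_fits = 0
    for lam in candidates:
        w = fit_ridge_1d(train[0], train[1], lam)  # one full "training run"
        n_fits += 1
        err = mse(w, val[0], val[1])               # score on held-out data
        if best_err is None or err < best_err:
            best_lam, best_err = lam, err
    return best_lam, best_err, n_fits


if __name__ == "__main__":
    # Made-up toy data, not from the dental x-ray dataset.
    train = ([1.0, 2.0, 3.0], [2.1, 3.9, 6.2])
    val = ([4.0, 5.0], [8.0, 10.1])
    print(tune_lambda(train, val, [0.0, 0.1, 1.0, 10.0]))
```

With a real network, each call that stands in for `fit_ridge_1d` here would be a complete training run, which is why the number of candidate values matters so much when both the model and the dataset are large.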

Due to the long-running nature of the experiment, I tackled my research project not only as a machine learning project but also as a high-performance computing project. I happened to be taking systems courses like CSC367: Parallel Programming and CSC369: Operating Systems at the same time as my ROP399, which allowed me to better appreciate the underlying technical considerations in the development of my experimental machine learning model. I harnessed powerful technologies like the Intel AVX2 vector instruction set for things like image pre-processing on the CPU and the Nvidia CUDA runtime environment through PyTorch to accelerate tensor operations using multiple GPUs. Overall, the final run of my experiment took about 25 hours even with all the high-level optimizations I considered, and that was on an insane lab machine with an Intel i7-8700 CPU and an Nvidia GeForce GTX Titan X!

Overall, my ROP not only opened a door to the world of machine learning and high-performance computing for me but, in doing so, taught me so much more. It strengthened my independent learning, project management, and software development skills. It taught me more about myself. I feel that I have never experienced so much growth as an academic, problem-solver, and software engineer in such a condensed period of time.

I am proud of all the skills I’ve gained in Dr. Tyrrell’s lab and I am extremely thankful for having received the privilege of working in his lab. He is one of the most supportive professors I have had the pleasure of meeting.

Now that I have completed my third year of school, I’m off to begin my year-long software engineering internship at Intel and continue my journey.

Signing out,

Adam Adli