Have you ever tried to assemble a Lego set and ended up with mysterious extra pieces? Or perhaps you have cleaned up after a big party and found some confetti hiding in the corners days later? Welcome to the world of “residuals”!

Residuals pop up everywhere. It’s an everyday term, but it’s fancier than just a word for the leftovers of a meal: in regression models it describes the difference between observed and predicted values, and in finance it refers to what’s left of an asset. Still, none of these compares to the role residuals play in machine learning, particularly in training deep neural networks.

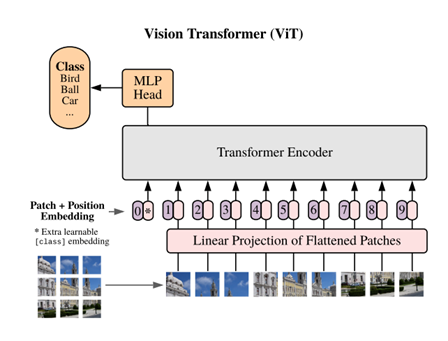

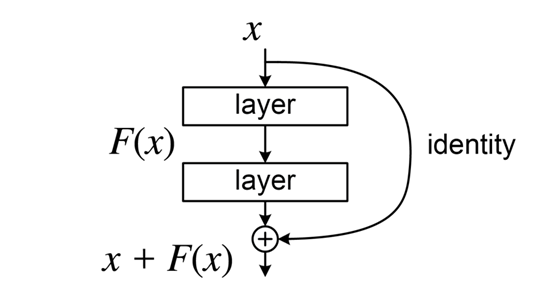

When you use backpropagation to learn an approximation of a function from an input space to an output space, the weights are updated based on the learning rate and on gradients calculated through the chain rule. As a neural network gets deeper, the gradient that reaches the earliest layers becomes a product of many small factors, often much smaller than 1, so it shrinks toward zero and the network becomes excessively hard to optimize. This phenomenon, prevalent in deep learning, is called the vanishing gradient problem.
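To see how quickly those factors compound, here is a toy sketch under loudly stated assumptions: a hypothetical chain of scalar sigmoid layers, each with weight 1 and pre-activation 0. That is actually the best case for a sigmoid, whose derivative peaks at 0.25, so each layer multiplies the gradient by 0.25 and after 10 layers the factor is 0.25**10, already under one millionth. The function names are illustrative, not from any library.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def sigmoid_grad(z: float) -> float:
    # Derivative of the sigmoid; its maximum value is 0.25, reached at z == 0.
    s = sigmoid(z)
    return s * (1.0 - s)


def backprop_factor(depth: int, weight: float = 1.0, z: float = 0.0) -> float:
    """Chain-rule product of per-layer local derivatives (weight * sigmoid'(z))
    across `depth` hypothetical scalar layers."""
    grad = 1.0
    for _ in range(depth):
        grad *= weight * sigmoid_grad(z)
    return grad


for depth in (1, 5, 10, 20):
    print(depth, backprop_factor(depth))
```

Real networks have matrices instead of scalars and other activations, so this only illustrates the multiplicative effect, not the exact magnitude in any particular model.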

However, notice that the deep layers of a neural network are usually composed of mappings that are close to the identity. This is exactly where residual connections work their magic! Suppose your true mapping from input to output is h(x), and let the forward pass be f(x)+x. It follows that the mapping f has to learn is h(x)-x, which is close to the zero function. This makes f(x) much easier to learn under the vanishing gradient problem, since a function close to the zero function demands less sensitivity from each parameter than the identity function does.
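The effect on gradients can be sketched with another toy, scalar example. Assume, purely for illustration, that every residual branch is the hypothetical f(x) = tanh(w·x) with a small weight w = 0.1, so it sits close to the zero function. By the chain rule, a plain layer x → f(x) contributes a local factor f'(x), while a residual layer x → f(x)+x contributes f'(x)+1; the extra 1 from the identity path keeps the product of factors from collapsing.

```python
import math


def branch_grad(x: float, w: float) -> float:
    """Local derivative d/dx of the hypothetical branch f(x) = tanh(w * x)."""
    t = math.tanh(w * x)
    return w * (1.0 - t * t)


def input_gradient(x: float, weights: list[float], residual: bool) -> float:
    """Chain-rule gradient of the network output with respect to its input x.

    Plain layer:    x_next = f(x),      local factor f'(x)
    Residual layer: x_next = f(x) + x,  local factor f'(x) + 1
    """
    grad = 1.0
    for w in weights:
        local = branch_grad(x, w)  # evaluated at the layer's input
        grad *= (local + 1.0) if residual else local
        f = math.tanh(w * x)
        x = f + x if residual else f  # forward pass to the next layer
    return grad


weights = [0.1] * 20  # 20 layers whose branches stay near the zero function
print("plain:   ", input_gradient(0.5, weights, residual=False))
print("residual:", input_gradient(0.5, weights, residual=True))
```

In the plain stack every local factor is at most 0.1, so the gradient ends up below 0.1**20; in the residual stack every factor is at least 1, so the gradient reaching the input cannot vanish.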

Now, before we dive too deep into the wizardry of residuals, shall we use “residual” in a sentence?

Serious: Neuroscientists wanted to explore whether CNNs perform similarly to the human brain in visual tasks, and to this end, they simulated grasp planning using a computational model called the generative residual convolutional neural network.

Less serious: Mom: “What happened?”

Me: “Sorry Mom, but after my attempt to bake chocolate cookies, the residuals were a smoke-filled kitchen and a cookie-shaped piece of charcoal that even the dog wouldn’t eat.”

See you in the blogosphere,

Mason Hu