AP? Average Precision! What is it? And how is it useful?

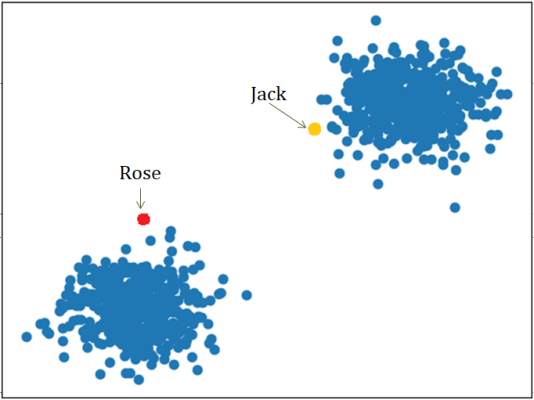

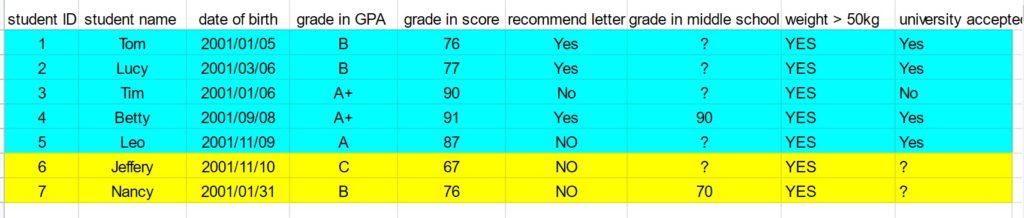

Imagine you are given a prediction model that can identify common objects, and you want to know how well the model performs. So you prepare a picture that contains 2 people, and label them yourself with bounding boxes in yellow. Then you apply the model to this image, and the model boxes the people in red, each with its own confidence score. Not bad, right? But how can you tell if a prediction is correct?

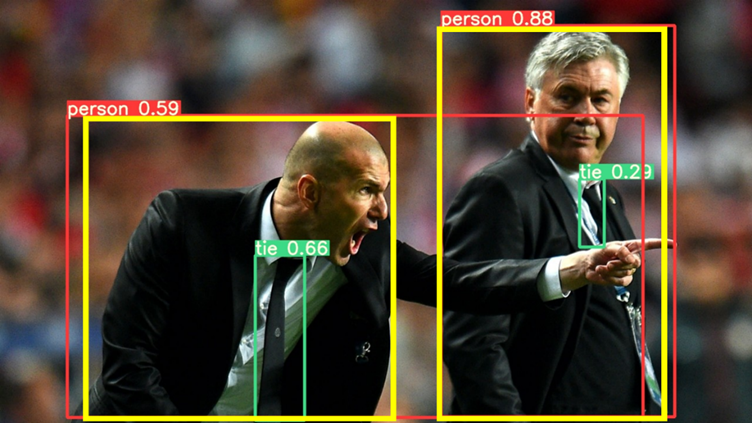

That’s where Intersection over Union (IoU) comes in, the first stop on our journey to AP. Looking at the boxes in the picture, you can see that parts of each yellow box and red box overlap. IoU is the area of that overlap divided by the area of the union of the two boxes. For example, the prediction for the person on the left has a smaller IoU than the prediction for the person on the right.

If we set the IoU cutoff to 0.8, then the prediction on the left is classified as a false positive (FP) since it does not reach the threshold, whereas the prediction on the right is a true positive (TP).
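To make the idea concrete, here is a minimal sketch of the IoU calculation and the cutoff check. The `(x_min, y_min, x_max, y_max)` box format, the function names `iou` and `classify`, and the example coordinates are all assumptions made for illustration; they are not taken from the pictures above.

```python
def iou(box_a, box_b):
    """Intersection over Union of two boxes given as (x_min, y_min, x_max, y_max)."""
    # Corners of the overlapping rectangle (if the boxes overlap at all).
    ix_min = max(box_a[0], box_b[0])
    iy_min = max(box_a[1], box_b[1])
    ix_max = min(box_a[2], box_b[2])
    iy_max = min(box_a[3], box_b[3])

    # Clamp at zero so boxes that do not overlap get an intersection of 0.
    inter_w = max(0.0, ix_max - ix_min)
    inter_h = max(0.0, iy_max - iy_min)
    intersection = inter_w * inter_h

    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - intersection

    return intersection / union if union > 0 else 0.0


def classify(pred_box, truth_box, threshold=0.8):
    """Label a prediction TP if its IoU reaches the threshold, otherwise FP."""
    return "TP" if iou(pred_box, truth_box) >= threshold else "FP"


# Hypothetical boxes: one ground-truth label and two predictions.
truth = (0, 0, 10, 10)
close_prediction = (1, 0, 10, 10)   # overlaps most of the label
loose_prediction = (4, 0, 14, 10)   # shifted well to the right

print(classify(close_prediction, truth))  # TP
print(classify(loose_prediction, truth))  # FP
```

The 0.8 default simply mirrors the cutoff used in the example above; other cutoffs work the same way.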

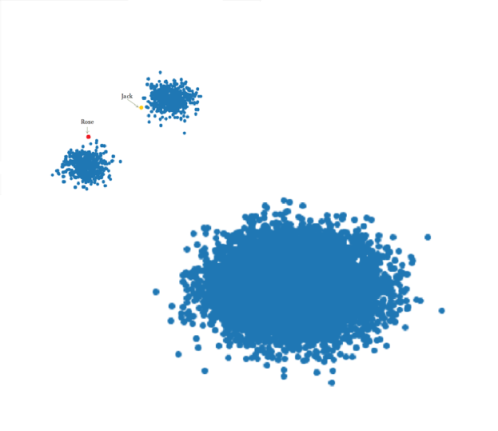

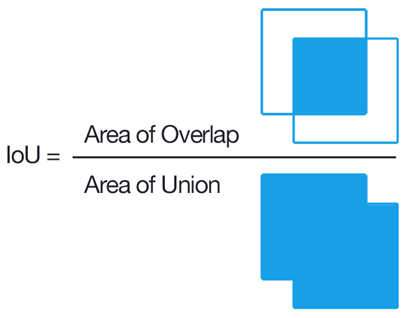

Now for the final piece before calculating AP. In this image of cats, we labeled 5 cats in red, and the predictions are drawn in yellow. We rank the predictions by descending confidence score, and calculate the precision and recall at each rank. Precision is the proportion of TPs among all predictions made so far, and recall is the proportion of TPs among all ground-truth objects.

Here is a summary of calculations.

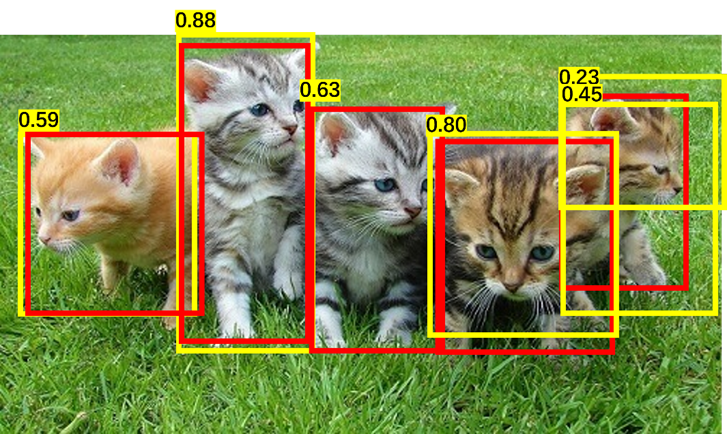

| Rank of predictions | Correct (T/F) | Precision | Recall |
| --- | --- | --- | --- |
| 1 | T | 1 | 0.2 |
| 2 | T | 1 | 0.4 |
| 3 | F | 0.67 | 0.4 |
| 4 | T | 0.75 | 0.6 |
| 5 | T | 0.8 | 0.8 |
| 6 | F | 0.67 | 0.8 |
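If you would like to check the table yourself, the running precision and recall can be sketched in a few lines. The function name `precision_recall_table` is made up for this post; the T/F flags and the count of 5 ground-truth cats come straight from the table.

```python
def precision_recall_table(correct_flags, num_ground_truth):
    """Return (rank, precision, recall) for each prediction, in ranked order."""
    rows = []
    true_positives = 0
    for rank, is_correct in enumerate(correct_flags, start=1):
        if is_correct:
            true_positives += 1
        precision = true_positives / rank            # TPs among predictions so far
        recall = true_positives / num_ground_truth   # TPs among all labeled cats
        rows.append((rank, precision, recall))
    return rows


# Predictions sorted by descending confidence: T, T, F, T, T, F.
flags = [True, True, False, True, True, False]
rows = precision_recall_table(flags, num_ground_truth=5)

for rank, precision, recall in rows:
    print(f"rank {rank}: precision={precision:.2f} recall={recall:.1f}")
```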

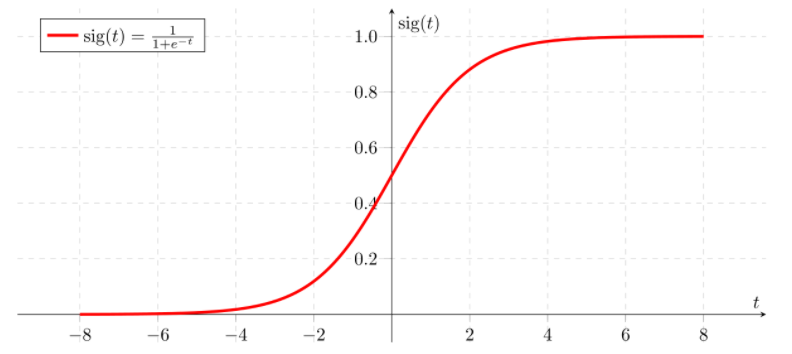

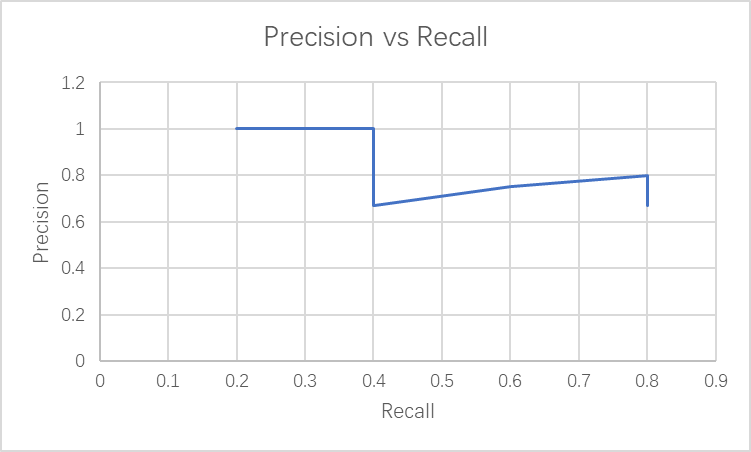

Then we plot the precision-recall curve.

Generally, as recall increases, precision decreases. AP is the area under the precision-recall curve! It ranges from 0 to 1, and the higher the better.
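Here is a rough sketch of one simple way to estimate that area, assuming the step-wise convention: every time a correct prediction raises recall, add a rectangle whose height is the precision at that rank and whose width is the jump in recall. The function name `average_precision` is hypothetical, and this is only one convention. Benchmarks such as PASCAL VOC and COCO use interpolated variants, so the number from an evaluation toolkit may differ slightly.

```python
def average_precision(correct_flags, num_ground_truth):
    """Step-wise area under the precision-recall curve (no interpolation)."""
    true_positives = 0
    area = 0.0
    recall_step = 1 / num_ground_truth  # each TP raises recall by this much
    for rank, is_correct in enumerate(correct_flags, start=1):
        if is_correct:
            true_positives += 1
            precision = true_positives / rank
            area += precision * recall_step
    return area


# Same ranked cat predictions as in the table: T, T, F, T, T, F with 5 labeled cats.
flags = [True, True, False, True, True, False]
ap = average_precision(flags, num_ground_truth=5)
print(f"AP = {ap:.2f}")
```

For the cat example this adds up to (1 + 1 + 0.75 + 0.8) × 0.2 = 0.71. The fifth cat is never found, so the stretch of the curve between recall 0.8 and 1 contributes nothing.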

Whoa! That’s a complicated definition. Luckily, you rarely need to work it out by hand, since AP is often calculated for you and reported along with the model. Next time you see AP, you’ll know it represents how well the model detects objects.

Now for the fun part, using AP in a sentence by the end of the day:

Serious: AP is a measure of accuracy for an object detection model.

Less serious:

Child: Hey Mom! I need some help with my assignment on boxing all the cars on the road.

Mother: Try this model! It has an AP of 0.8, and it may be better at this than I am.

…I’ll see you in the blogosphere.

Grace Yu