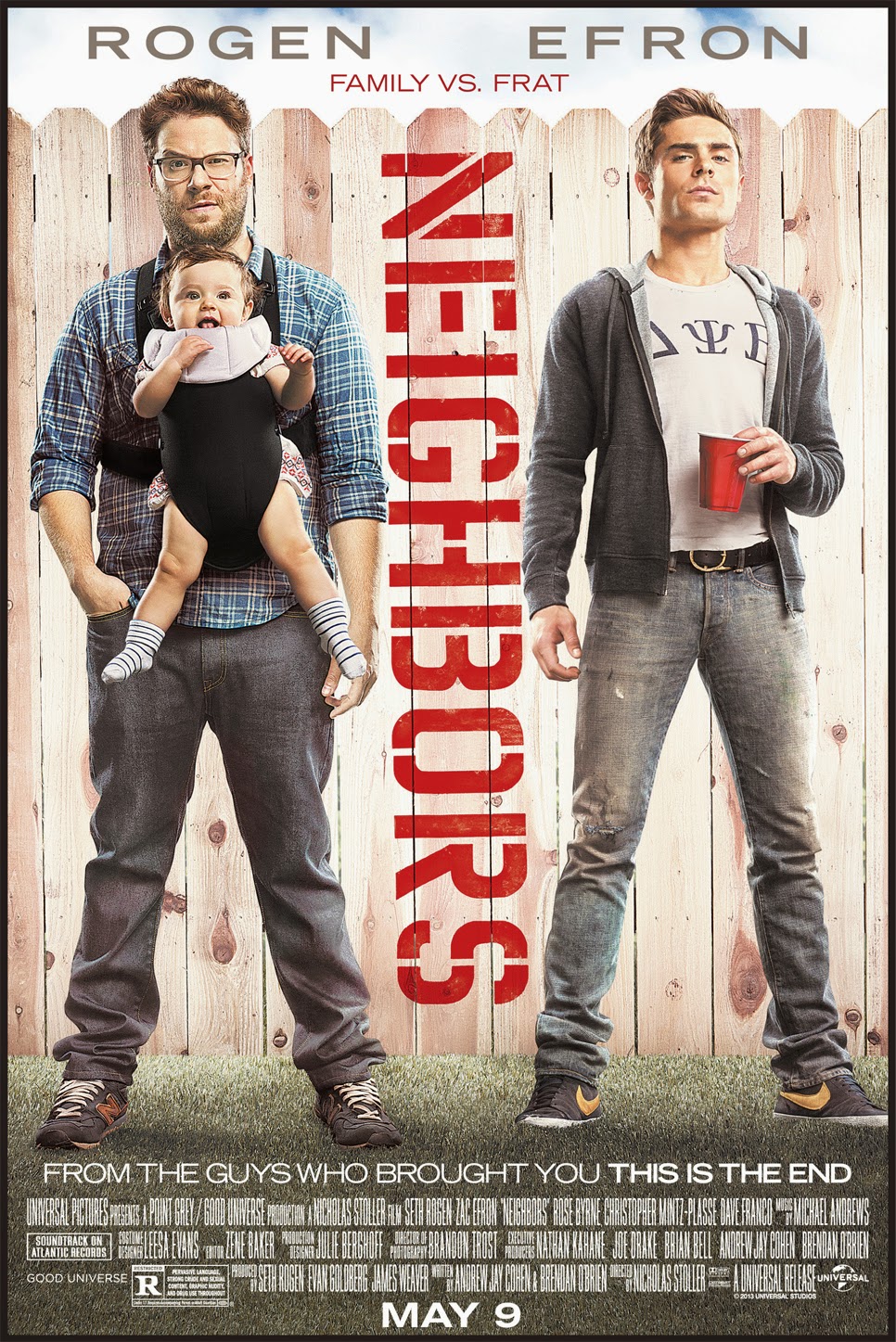

Classic Seth Rogen movie. Today we will be talking about good neighbors as a follow-up to my first post “What cluster Are You From?”. If you want to learn a little about bad neighbors, watch the trailer for the movie Neighbors.

So let’s say you are working with a large amount of data that contains many, many variables of interest. In this situation you are most likely working in a multidimensional space. Multivariate analysis will help you make sense of that space and is simply defined as any analysis that incorporates more than one dependent variable (AKA response or outcome variable).

*** Stats jargon warning***

Multivariate analysis can include analysis of data covariance structures to better understand or reduce data dimensions (PCA, Factor Analysis, Correspondence Analysis), or the assignment of observations to groups using an unsupervised methodology (Cluster Analysis) or a supervised methodology (k-Nearest Neighbor, or k-NN). We will be talking about the latter today.

*** Stats-reduced safe return here***

Classification is simply the assignment of previously unseen entities (objects such as records) to a class (or category) as accurately as possible. In our case, you are fortunate to have a training set of entities or objects that have already been labelled or classified and so this methodology is termed “supervised”. Cluster analysis is unsupervised learning and we will talk more about this in a later post.

Let’s say, for example, you have made a list of all of your friends and labeled each one as “Super Cool”, “Cool”, or “Not cool”. How did you decide? You probably have a bunch of attributes or factors that you considered. If you have many, many attributes, this process could be daunting. This is where k-nearest neighbor, or k-NN, comes in. For an unlabeled object, it finds the most similar labeled items in terms of their attributes, looks at their labels, and gives the unassigned object the label that wins the majority vote!

This is how it basically works:

1- Defines similarity (or closeness) and then, for a given object, measures how similar each labelled object in your training set is to it. The closest ones become the neighbors who each get a vote.

2- Decides on how many neighbors get a vote. This is the k in k-NN.

3- Tallies the votes and voila – a new label!
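
To make the three steps concrete, here is a minimal sketch in plain Python. Everything in it is made up for illustration: the two attributes (humor and reliability scores out of 10), the `friends` training set, and the function name `knn_label` are my assumptions, and Euclidean distance is just one common choice of "closeness".

```python
import math
from collections import Counter

# Hypothetical training set: ((humor, reliability), label).
# The scores and labels are invented for this example.
friends = [
    ((9, 8), "Super Cool"),
    ((8, 9), "Super Cool"),
    ((6, 5), "Cool"),
    ((5, 6), "Cool"),
    ((2, 1), "Not cool"),
    ((1, 3), "Not cool"),
]

def knn_label(new_point, training, k=3):
    """Label new_point by majority vote among its k nearest labelled neighbors."""
    # Step 1: measure closeness (Euclidean distance here) to every labelled object.
    by_distance = sorted(training, key=lambda item: math.dist(new_point, item[0]))
    # Step 2: only the k closest neighbors get a vote.
    voters = by_distance[:k]
    # Step 3: tally the votes and return the most common label.
    votes = Counter(label for _, label in voters)
    return votes.most_common(1)[0][0]

# A new friend with humor 7 and reliability 8.
print(knn_label((7, 8), friends, k=3))
```

With these made-up numbers, the three closest friends are two “Super Cool” and one “Cool”, so the new friend is labelled “Super Cool”. Try changing k to see how the vote can shift.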

All of this is fun to do by hand, but it is much easier to let the k-NN algorithm and your trusty computer do the work!

So, now you have an idea about a supervised learning technique that will allow you to work with a multidimensional data set. Cool.

Listen to Frank Sinatra’s The Girl Next Door to decompress and I’ll see you in the blogosphere…

Pascal Tyrrell

Website of Prof. Pascal Tyrrell