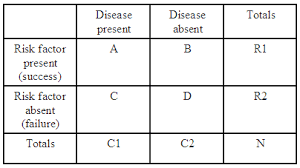

In the final installment of this series, I want to discuss how we can use the Ratios of Risk in a clinical context. To recap, we previously discussed an absolute measure of the difference in risk (appropriately called the risk difference, or RD), as well as a relative measure (the relative risk, or RR).

To see how we can apply these risks, let’s tweak our original example. Let’s assume that smartphone thumb could potentially lead to loss of thumb function (not really, don’t worry!). Let’s also suppose that surgery is a possible treatment for smartphone thumb, and the following results were obtained after a trial.

| | Surgery | No Surgery (control) | Totals |
|---|---|---|---|
| Retained thumb function | 7 | 6 | 13 |
| Lost thumb function | 3 | 4 | 7 |
| Totals | 10 | 10 | 20 |

The big question is: how good an option is surgery?

Let’s calculate the RD (note that the “risk” here is of losing thumb function, and we subtract the surgery group’s risk from the control group’s): RD = 4/10 – 3/10 = 0.1

In other words, the risk of losing thumb function is 10 percentage points higher if you do not have the surgery. Based on this information alone (or by calculating the RR and OR), we might be quick to conclude that surgery is a great intervention.
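If you would like to check these numbers yourself, here is a minimal sketch in Python that works straight from the table. The variable names are my own, and I am assuming the same direction of comparison as the RD above (control relative to surgery) for the RR and OR as well. Fractions are used so the arithmetic stays exact.

```python
from fractions import Fraction

# Counts of patients who lost thumb function, taken from the trial table.
lost_surgery, total_surgery = 3, 10
lost_control, total_control = 4, 10

# Risk = patients with the bad outcome / all patients in that group.
risk_surgery = Fraction(lost_surgery, total_surgery)
risk_control = Fraction(lost_control, total_control)

# Absolute and relative comparisons (assumed direction: control vs. surgery).
rd = risk_control - risk_surgery
rr = risk_control / risk_surgery

# Odds = patients with the bad outcome / patients without it.
odds_surgery = Fraction(lost_surgery, total_surgery - lost_surgery)
odds_control = Fraction(lost_control, total_control - lost_control)
odds_ratio = odds_control / odds_surgery

print(f"RD = {float(rd):.2f}")          # RD = 0.10
print(f"RR = {float(rr):.2f}")          # RR = 1.33
print(f"OR = {float(odds_ratio):.2f}")  # OR = 1.56
```

Flipping the direction (surgery relative to control) would give an RR of 0.75 instead, so it is worth stating which way round you are comparing.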

But before we do that, let’s calculate another statistic, which will prove to be very useful: the number needed to treat (NNT), given by 1/RD. The NNT is the number of patients that must be treated for 1 additional patient to derive some benefit (here, to retain an intact and functioning thumb). In our case, NNT = 1/0.1 = 10. So, in order to save 1 patient from losing thumb function, 10 patients must undergo surgery, and the other 9 will have had it with no apparent benefit. As you can see, the NNT sheds a very humbling light on our intervention. The ideal NNT is equal to 1. Beyond that, we must keep in mind that the additional patients undergoing the treatment have been exposed to all of its negative side effects, without the intended benefit.
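The NNT calculation can be sketched in a few lines of Python too. The function name `number_needed_to_treat` is hypothetical, and rounding up to a whole patient is a common convention that I am assuming here rather than something our example needs (1/0.1 is already a whole number).

```python
from fractions import Fraction
import math


def number_needed_to_treat(risk_control, risk_treated):
    """Return 1/RD, rounded up to a whole patient (assumed convention)."""
    rd = risk_control - risk_treated
    if rd <= 0:
        # No risk reduction means there is no benefit to count patients for.
        raise ValueError("treatment shows no risk reduction; NNT is undefined here")
    return math.ceil(1 / rd)


# Risks of losing thumb function from the trial table.
nnt = number_needed_to_treat(Fraction(4, 10), Fraction(3, 10))
print(nnt)  # 10
```

The guard for a zero or negative RD is there because 1/RD only makes sense when the treated group actually does better than the control group.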

Throughout this series we discussed the meaning of risk, how it can be used for comparison (the various ratios of risk), and finally its application in a clinical setting (the ramifications of risk). After all these posts, smartphone thumb may have started to seem like a very real threat. But I think you should be fine… as long as you know the risks!

So what’s up with the Dodge Ram ad (I am actually an F150 guy myself)? Well, I just thought it went well with ramifications of risk. Cheesy, I know. But who knows, maybe it will help you to remember…

See you in the blogosphere,

Indranil Balki and Pascal Tyrrell