Hi! My name is Parinita Edke and I’m finishing my third year at UofT, specializing in Computer Science with a minor in Statistics. I did a STA399Y research project with Professor Tyrrell from September 2020 – April 2021 and I am excited to share my experience in the lab!

I have always been interested in medicine and in applying Computer Science and Statistics to problems in the medical field. I was looking for opportunities to do research at this intersection and was excited when I saw Professor Tyrrell’s ROP posting. I applied before the second-round deadline and waited to hear back. Almost two weeks after the deadline, I had still not heard anything and decided to follow up on the status of my application. I quickly received a reply from Professor Tyrrell saying that he had already picked his students before receiving my application. While this was extremely disappointing, I thanked Professor Tyrrell for his time, told him that I was still interested in working with him during the year, and attached my application package to the email. I was not really expecting anything to come of this, so I was extremely happy when I received an invitation to an interview! After a quick chat with Professor Tyrrell about my goals and fit for the lab, I was accepted as an ROP student!

Soon after being accepted, I joined my first lab meeting where I was quickly lost in the technical machine learning terms, the statistical concepts and the medical imaging terminology used. I ended the meeting determined to really begin understanding what machine learning was all about!

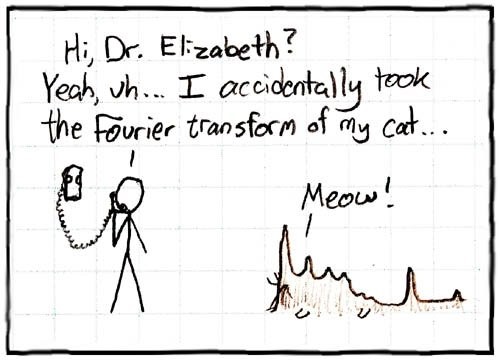

This marked the beginning of the long and challenging journey through my project. When I chose my project, it seemed interesting because solving the problem would allow some cool questions to be answered. The task was to detect the presence of blood in ultrasound images of the knee joint; my project was to determine whether the Fourier Transform can be used to generate features that perform this task well. It seemed quite straightforward at first – simply generate Fourier-transformed features and run a classification model to get the outputs, right? Having completed the project, I am here to tell you that it was far from straightforward. My progress looked more like a zigzag than a straight line.

The first challenge I faced was understanding the theory behind the Fourier Transform and how it applies to the task at hand. This took me quite some time to fully grasp and was definitely one of the more challenging parts of the project. The next challenge was figuring out the steps and the tools I would need for my project. Rajshree, a previous lab member, had done some initial work using a CNN+SVM model. I first tried to replicate what Rajshree had done in order to create a baseline to compare my approach against. It took me some time to understand what each line of code in Rajshree’s model did, but once I got it to work, I felt amazing! Reading through Rajshree’s code also gave me experience with the common Python libraries used in machine learning, so building my own model went much more quickly. When I ran my model for the first time, I felt incredible! The process was very frustrating at times, but when I saw results for the first time, I felt that all the struggle had been worth it. Throughout this process of figuring out the project steps and building the model, Mauro was always there to help, answering my questions with enthusiasm and encouraging me to keep going.
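For readers curious what "generating Fourier Transformed features" can look like in practice, here is a minimal sketch. It is purely illustrative and is not the model built in the lab: the function name `fourier_features`, the `n_bins` parameter, and the choice of a radially averaged log-magnitude spectrum are all assumptions made for the example, and a random array stands in for an ultrasound image.

```python
import numpy as np

def fourier_features(image, n_bins=16):
    """Hypothetical helper: radially averaged log-magnitude spectrum of a 2D image."""
    image = np.asarray(image, dtype=float)

    # 2D FFT, with the zero-frequency (DC) component shifted to the centre
    spectrum = np.fft.fftshift(np.fft.fft2(image))
    log_mag = np.log1p(np.abs(spectrum))

    # Distance of every frequency bin from the centre of the shifted spectrum
    rows, cols = image.shape
    y, x = np.indices((rows, cols))
    radius = np.hypot(y - rows // 2, x - cols // 2)

    # Average the log-magnitude inside n_bins concentric rings
    edges = np.linspace(0.0, radius.max(), n_bins + 1)
    ring = np.clip(np.digitize(radius, edges) - 1, 0, n_bins - 1)
    sums = np.bincount(ring.ravel(), weights=log_mag.ravel(), minlength=n_bins)
    counts = np.bincount(ring.ravel(), minlength=n_bins)
    return sums / np.maximum(counts, 1)

# Stand-in for a grayscale ultrasound image (random data, for illustration only)
rng = np.random.default_rng(0)
fake_image = rng.random((64, 64))
features = fourier_features(fake_image, n_bins=16)
print(features.shape)  # one feature per frequency ring
```

A feature vector like this, computed for each image, could then be passed to any standard classifier (an SVM, for example) together with the blood/no-blood labels.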

Throughout the process, Professor Tyrrell was always there as well – during our weekly ROP meetings, he always reminded us to think about the big picture of what our projects were about and the objectives we were trying to accomplish. I definitely veered off in the wrong direction at times, but Professor Tyrrell was quick to pull me back and point me in the right one. Without this guidance, I would not have been able to complete the project in a way that I am proud of.

Looking back at the year, I am astonished at the number of things I have learned and how much I have grown. Everything that I learned, not only about machine learning, but also about writing a research paper, learning from others and from my own mistakes, collaborating with others, learning from even more of my own mistakes, and persevering when things get tough, will stay with me throughout the rest of my undergraduate studies and my professional career.

Thank you, Professor Tyrrell, for taking a chance on me. You could have simply passed on my application, but the fact that you took a chance on me and accepted me into the course led to an invaluable experience that I truly appreciate. The experiences and the connections I have made in this lab have been a highlight of my year, and I hope to keep contributing to the lab in the future!

Parinita Edke